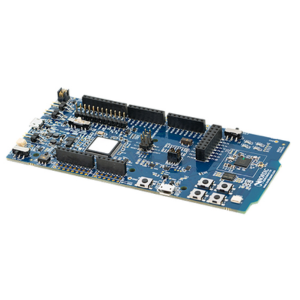

The Coral USB Accelerator is a hardware device developed by Google as part of its Coral project. It is designed to provide on-device AI (artificial intelligence) inference for a variety of edge devices, including single-board computers like the Raspberry Pi and other embedded systems. The on-board Edge TPU is a small ASIC designed by Google that accelerates TensorFlow Lite models in a power-efficient manner: it can perform 4 trillion operations per second (4 TOPS) using 2 watts of power, which works out to 2 TOPS per watt. For example, one Edge TPU can execute state-of-the-art mobile vision models such as MobileNet v2 at almost 400 frames per second. This on-device ML processing reduces latency, increases data privacy, and removes the need for a constant internet connection.
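The efficiency figures quoted above follow from simple arithmetic. Here is a minimal sketch that uses only the numbers in the text (4 TOPS, 2 W, roughly 400 FPS). The variable names are illustrative. Since the text says "almost 400" frames per second, the per-frame time computed here should be read as a lower bound.

```python
# Figures quoted in the text above; names are illustrative only.
PEAK_TOPS = 4.0        # trillion int8 operations per second
POWER_WATTS = 2.0      # power draw at peak throughput
MOBILENET_FPS = 400.0  # "almost 400", so treat this as an upper bound

tops_per_watt = PEAK_TOPS / POWER_WATTS   # efficiency
watts_per_tops = POWER_WATTS / PEAK_TOPS  # the same ratio, inverted
frame_time_ms = 1000.0 / MOBILENET_FPS    # time budget per frame

print(f"{tops_per_watt} TOPS per watt")
print(f"{watts_per_tops} W per TOPS")
print(f"at least {frame_time_ms} ms per frame")
```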

AI Acceleration: The USB Accelerator is equipped with Google’s Edge TPU (Tensor Processing Unit), which is optimized for running machine learning models efficiently. It accelerates AI inference tasks without the need for a cloud connection, making it suitable for edge computing and privacy-sensitive applications.

USB Connectivity: It connects to a host device, such as a computer or single-board computer, via a USB interface. This enables easy integration into a wide range of hardware platforms. It also works over USB 2.0, but inference is slower than over USB 3.0.

Edge Processing: The device allows you to run machine learning models directly on the edge device, reducing latency and bandwidth usage, and improving real-time processing capabilities for AI applications.

Supported Frameworks: The Coral USB Accelerator is compatible with TensorFlow Lite, a popular machine learning framework, making it accessible for developers already familiar with TensorFlow.

Versatility: It can be used for various applications, including image and video classification, object detection, speech recognition, and more.

The Coral USB Accelerator is designed to bring AI capabilities to a wide range of edge devices, making it easier for developers and hobbyists to implement machine learning applications without relying on cloud-based solutions. It is part of Google’s broader Coral ecosystem, which includes development tools, software libraries, and pre-trained models to facilitate AI development at the edge.

Features:

- Easy to use

- Easy to connect

- Performs high-speed ML inferencing: The on-board Google Edge TPU coprocessor delivers 4 TOPS (int8) at 2 TOPS per watt, that is, 4 trillion operations (tera-operations) per second using 0.5 watts for each TOPS. For example, it can execute state-of-the-art mobile vision models such as MobileNet v2 at almost 400 FPS in a power-efficient manner.

- Supports all major platforms: Connects via USB to any system running Debian Linux (including Raspberry Pi), macOS, or Windows 10.

- Supports TensorFlow Lite: No need to build models from the ground up. TensorFlow Lite models can be compiled to run on the Edge TPU.
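
As a rough illustration of the last two points, the sketch below shows one way a compiled TensorFlow Lite model might be loaded onto the Edge TPU from Python on each supported platform. It assumes the Edge TPU runtime and the `tflite_runtime` package are already installed. The helper names `edgetpu_lib_name` and `make_interpreter` and the model file name in the usage note are hypothetical. The shared-library names are the ones the Coral documentation gives for each operating system.

```python
import platform

# Edge TPU runtime library per operating system, as named in the Coral docs.
EDGETPU_SHARED_LIB = {
    "Linux": "libedgetpu.so.1",
    "Darwin": "libedgetpu.1.dylib",  # macOS
    "Windows": "edgetpu.dll",
}


def edgetpu_lib_name(system=None):
    """Return the Edge TPU library name for `system` (default: this host)."""
    system = system or platform.system()
    if system not in EDGETPU_SHARED_LIB:
        raise RuntimeError(f"unsupported platform: {system}")
    return EDGETPU_SHARED_LIB[system]


def make_interpreter(model_path):
    """Build a TFLite interpreter that delegates to the Edge TPU.

    `model_path` must point to a model already compiled for the Edge TPU.
    """
    # Imported lazily so the helper above works without tflite_runtime.
    from tflite_runtime.interpreter import Interpreter, load_delegate

    delegate = load_delegate(edgetpu_lib_name())
    interpreter = Interpreter(
        model_path=model_path,
        experimental_delegates=[delegate],
    )
    interpreter.allocate_tensors()
    return interpreter
```

With an accelerator plugged in, a call such as `make_interpreter("model_edgetpu.tflite")` (a hypothetical file name) would return an interpreter ready for `set_tensor`, `invoke`, and `get_tensor`.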